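Notes on how to read from and write to a MySQL database with Spark.

Spark version: 2.2.0

Connecting to MySQL from spark-shell

The official example

First, a look at the example in the official documentation: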
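
For reference, the JDBC example in the Spark SQL guide looks roughly like the sketch below. It is written from memory rather than copied, so treat the connection strings (dbserver, schema.tablename, username, password) as placeholders. The launch line in the comment shows where the driver jar comes in.

```scala
import org.apache.spark.sql.SparkSession

// spark-shell is assumed to have been started with the PostgreSQL driver jar:
//   bin/spark-shell --driver-class-path postgresql-9.4.1207.jar --jars postgresql-9.4.1207.jar

// Inside spark-shell this returns the session that already exists as `spark`.
val spark = SparkSession.builder().getOrCreate()

// Connection settings for the JDBC data source; every value is a placeholder.
val pgOptions = Map(
  "url"      -> "jdbc:postgresql:dbserver",
  "dbtable"  -> "schema.tablename",
  "user"     -> "username",
  "password" -> "password"
)

// Load the table through the generic "jdbc" format.
val jdbcDF = spark.read.format("jdbc").options(pgOptions).load()
```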

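The official documentation uses a PostgreSQL database for its example; MySQL works the same way. The steps for connecting Spark to MySQL follow.

Download the MySQL jar

The official PostgreSQL example relies on a JDBC driver jar, postgresql-9.4.1207.jar. MySQL needs a corresponding jar, which can be downloaded from the official MySQL site, or from here.

I downloaded and tested two versions: mysql-connector-java-5.0.8-bin.jar and mysql-connector-java-6.0.6-bin.jar.

Both versions worked without problems. The steps below use mysql-connector-java-5.0.8-bin.jar.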

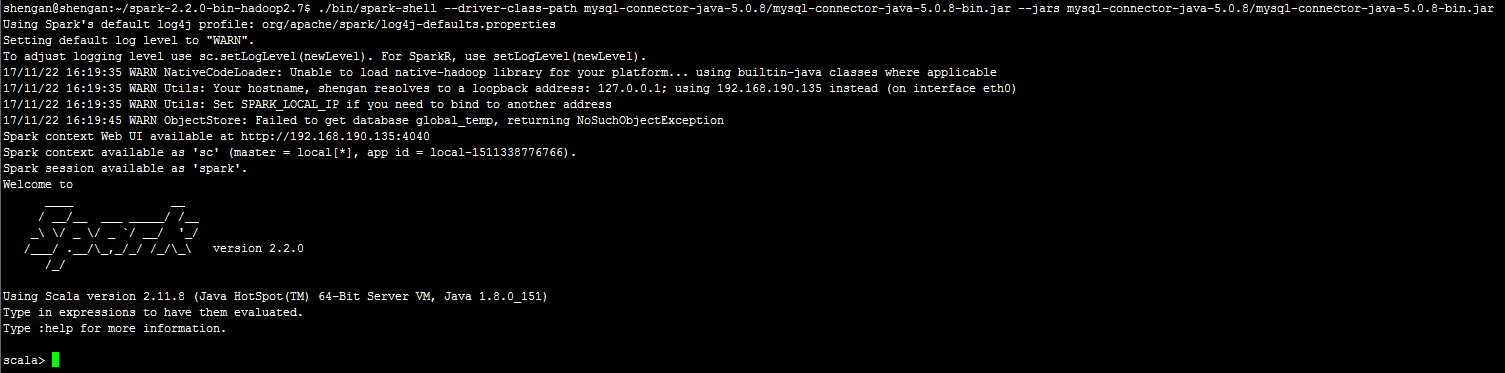

Starting spark-shell

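Start spark-shell with the downloaded connector jar passed to both the --driver-class-path option and the --jars option, mirroring the official example.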

If spark-shell reports an error during startup, try deleting the metastore_db/dbex.lck file and then run it again.

https://stackoverflow.com/questions/37442910/spark-shell-startup-errors
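
The usual way to remove the lock file is a plain rm from the shell. For anyone who prefers to script the cleanup, here is a minimal Scala sketch that does the same thing. It assumes it is run (for example with the plain scala REPL) from the directory where spark-shell was started, and that no other spark-shell process is still using that metastore_db directory.

```scala
import java.nio.file.{Files, Paths}

// Lock file path, relative to the directory spark-shell was launched from.
val lockFile = Paths.get("metastore_db", "dbex.lck")

// deleteIfExists returns true if a file was removed and false if none was there,
// so the call is safe even when the lock file is already gone.
val removed = Files.deleteIfExists(lockFile)
println(s"Removed $lockFile: $removed")
```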

Reading data from a MySQL table

https://docs.databricks.com/spark/latest/data-sources/sql-databases.html
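
A read can be sketched as follows, using DataFrameReader.jdbc with a java.util.Properties object. The host, port, database name (testdb), table name (people), and credentials are all hypothetical placeholders. The driver class is set explicitly because the 5.0.8 connector is assumed here.

```scala
import java.util.Properties
import org.apache.spark.sql.SparkSession

// Assumes spark-shell was started with the connector jar on both
// --driver-class-path and --jars.
val spark = SparkSession.builder().getOrCreate()

// Hypothetical connection details; replace them with your own.
val jdbcUrl = "jdbc:mysql://localhost:3306/testdb"
val connProps = new Properties()
connProps.setProperty("user", "root")
connProps.setProperty("password", "secret")
// Driver class of Connector/J 5.x; the 6.x line names it com.mysql.cj.jdbc.Driver.
connProps.setProperty("driver", "com.mysql.jdbc.Driver")

// Read the whole table into a DataFrame and inspect it.
val peopleDF = spark.read.jdbc(jdbcUrl, "people", connProps)
peopleDF.printSchema()
peopleDF.show(5)
```

The table argument also accepts a parenthesized subquery with an alias, such as "(select id, name from people) as p", which lets MySQL do the filtering before the data reaches Spark.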

Writing data to MySQL
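
A write can be sketched with DataFrameWriter.jdbc. As before, the URL, credentials, and the target table name (people_copy) are hypothetical, and the two sample rows exist only to have something to write.

```scala
import java.util.Properties
import org.apache.spark.sql.{SaveMode, SparkSession}

// Inside spark-shell this returns the existing session.
val spark = SparkSession.builder().getOrCreate()

// Hypothetical connection details; replace them with your own.
val jdbcUrl = "jdbc:mysql://localhost:3306/testdb"
val connProps = new Properties()
connProps.setProperty("user", "root")
connProps.setProperty("password", "secret")
connProps.setProperty("driver", "com.mysql.jdbc.Driver") // Connector/J 5.x class name

// A small DataFrame built from local data, with named columns.
val newRows = spark.createDataFrame(Seq((1, "alice"), (2, "bob"))).toDF("id", "name")

// Append the rows to the target table; Spark creates the table if it does not exist.
newRows.write.mode(SaveMode.Append).jdbc(jdbcUrl, "people_copy", connProps)
```

SaveMode.Append is chosen here so that repeated runs add rows. The default mode, SaveMode.ErrorIfExists, fails when the table is already there, and SaveMode.Overwrite replaces its contents.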

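Command summary

Put together, the whole sequence is: download the connector jar, start spark-shell with --driver-class-path and --jars pointing at it, then read from and write to MySQL through the JDBC data source.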

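Connecting to MySQL from a Spark program

Before MySQL was involved, the launch command was as follows:

To support MySQL, the launch command becomes:

As with the spark-shell session earlier, the --driver-class-path and --jars options are added at launch.

In my tests both options were required, and they had to come first in the command; appending them at the end did not work (presumably because everything after the application jar is passed to the program as its own arguments). The build.sbt file does not need to be changed.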
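
As an illustration only, a standalone program might look like the sketch below. It assumes the program is launched with spark-submit. The object name MysqlExample, the jar name my-app.jar, the helper connectionProps, and all connection details are hypothetical and not taken from the original program.

```scala
import java.util.Properties
import org.apache.spark.sql.SparkSession

// Hypothetical launch command; note that both options precede the application jar:
//   spark-submit --driver-class-path mysql-connector-java-5.0.8-bin.jar \
//     --jars mysql-connector-java-5.0.8-bin.jar \
//     --class MysqlExample my-app.jar
object MysqlExample {
  // Builds the JDBC properties; the driver class is the Connector/J 5.x name.
  def connectionProps(user: String, password: String): Properties = {
    val props = new Properties()
    props.setProperty("user", user)
    props.setProperty("password", password)
    props.setProperty("driver", "com.mysql.jdbc.Driver")
    props
  }

  def main(args: Array[String]): Unit = {
    val spark = SparkSession.builder().appName("MysqlExample").getOrCreate()

    // Placeholder URL, table, and credentials.
    val jdbcUrl = "jdbc:mysql://localhost:3306/testdb"
    val df = spark.read.jdbc(jdbcUrl, "people", connectionProps("root", "secret"))
    println(s"rows: ${df.count()}")

    spark.stop()
  }
}
```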